Imagine this scenario, set in the near future, say in 2023. A regular customer tells you that he had just bought a new mid-level American-made car and was using it for the first time to drop his kids off at school before heading to work. The customer also tells you that he was stationary, first in line at a red traffic light. The light changed to green for him, but for some reason, the brand new car refused to respond to throttle input as he tried to move off.

Instead, a loud, blaring alarm went off, his seat belt tightened, all the lights on the dashboard began to flash, and just as he thought he definitely needed to take this up with the dealer, a heavy delivery truck barged through the intersection on a light that was red for the truck. Fortunately, the side road was empty, so the truck had a clear passage through the intersection.

The customer is not only still severely shaken up when he arrives at your workshop; he is also perplexed at the fact that as soon as the speeding truck was clear of the intersection, the alarm in his car turned off, the dashboard lights went out, and the car again responded normally to all control inputs. Everything now seemed to work normally again, but “would you mind taking a look at the vehicle”, just in case it happens again that multiple electronic systems choose to fail at a critical moment, even though the failures saved his life?

What would your reaction be if a customer told you a story like this? Would you agree to “take a look” while not being sure what you’d be looking for? On the other hand, would you tell the customer that his new car was designed to ignore throttle inputs in some situations, and therefore, his new car had just saved his and his children’s lives because one or more advanced safety systems had functioned as intended?

Of course, cars don’t save the lives of their occupants intentionally, but from the perspective of a driver who was just saved from an almost inevitable accident, it might appear that way. However, from our perspective as technicians, and especially for those among us who do not have a clear understanding of the working principles of the latest developments in ADAS systems, it might appear as if some cars sometimes develop "minds of their own" when they assume control over one or more control functions in some situations. In this article, then, we take a closer look at new developments in advanced fatigue detection and collision avoidance systems in terms of what they do, and how they do it, starting with a look at-

Image source: Intel / Mobileye

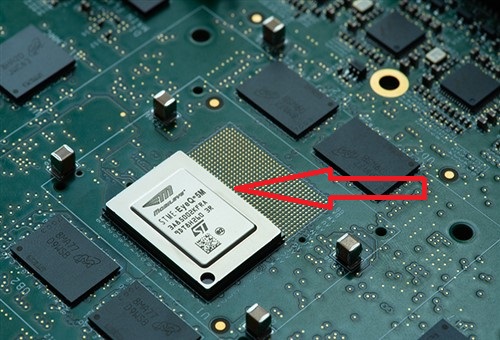

This image shows the placement of a Mobileye chip on the circuit board of an automotive control module that controls several ADAS systems on a late model passenger vehicle. Made by Intel, the same company that manufactures a wide range of microprocessors for an even wider range of industries, this particular chip is programmed with Intel's proprietary Mobileye EyeQ software. The technical details of this software fall outside the scope of this article, but suffice to say that it consists in large part of AI (Artificial Intelligence) derived algorithms whose primary functions include detecting obstacles and preventing an equipped vehicle from colliding with those obstacles.

As a practical matter, EyeQ technology is now being used by at least 27 OEM vehicle manufacturers, and various iterations of it are fitted to at least 40 million vehicles worldwide. We need not delve into the history and development of this technology here, beyond saying that in its current state of development, it is widely recognised by car manufacturers not only as a precursor to fully autonomous control software but also as a viable foundation on which to build fully autonomous vehicles on commercially viable scales.

While earlier iterations of EyeQ technology, or to use its proper name, “EyeQ vision-processor System-on-Chip”, have been in use for several years, the combination of greatly improved sensing devices like long-range radar and lidar transponders, and improved AI-based software, means that the latest iterations of the technology operate at efficiencies that were impossible to achieve just a few years ago.

For instance, the latest version can not only detect and interpret road signs, including speed limit signs correctly at much greater distances than before; it can also reliably distinguish between stationary and moving pedestrians, as well as consistently tell the difference between bicycles and motorcycles- none of which was possible just five years ago. As an added bonus, current EyeQ technology also supports some of the currently available fully autonomous/self-driving control systems, the least of which is autonomous control of the high and low headlight beams.

So what does this mean in practice, given that systems such as camera-based AEB (Autonomous Emergency Braking) have been in use for at least ten years already, and might soon become legally mandated in several automotive markets? It simply means this: there is no comparison between autonomous emergency braking as we know and understand it, and the high level of integration that is now taking place between EyeQ technology, and the number of new and next-level ADAS systems now coming into widespread use around the world.

Before we get to the specifics of the latest collision avoidance systems, though, it is perhaps worth noting that Mobileye also supplies a stand-alone EyeQ module, marketed under the name “Mobileye 8 Connect”, that can be retrofitted to almost any vehicle, (largely) regardless of its age, size, or purpose. Although the Mobileye 8 Connect system has limited functionality, it is highly effective in detecting obstacles, and several million systems have been fitted to heavy trucks, buses, and other commercial vehicles worldwide, which brings us to-

Image source: Bosch

This image shows the bulbous "eye" of a fourth-generation Bosch radar transponder mounted behind the grille of a 2020 GM product. Observant readers will notice that instead of the radar unit transmitting signals through a grille badge or similar cosmetic feature, this unit is brought out into the open, so to speak, to improve the unit's field of vision. Bear in mind that in earlier iterations of autonomous emergency braking systems, the radar unit "looked" straight ahead in a very narrow cone to detect obstacles either directly in front of the vehicle, or perhaps in adjacent lanes. When these systems detected an object in the vehicle's way*, a control module calculated the distance to the object, and based on this information, the vehicle's speed, and the relative speed between the equipped vehicle and the object (if the object was moving), the control module decided whether or not to initiate an emergency braking event.

* Assuming, of course, that the system was fully functional and properly calibrated, the older systems could initiate an emergency braking event before a human driver could recognise that a potentially hazardous situation existed or was developing.
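The braking decision described above can be pictured as a simple time-to-collision calculation. The sketch below is only an illustration of that idea and not any manufacturer's actual logic: the function names and the 1.5-second threshold are assumptions made for the example, and the object is assumed to be travelling along the same line as the equipped vehicle.

```python
def time_to_collision(distance_m: float, closing_speed_ms: float) -> float:
    """Seconds until impact at the current closing speed.

    Returns infinity when the gap is not closing.
    """
    if closing_speed_ms <= 0:
        return float("inf")
    return distance_m / closing_speed_ms


def should_brake(distance_m: float, own_speed_ms: float,
                 object_speed_ms: float, ttc_threshold_s: float = 1.5) -> bool:
    """Decide whether to start emergency braking.

    ttc_threshold_s is an illustrative value, not a real calibration figure.
    """
    closing_speed = own_speed_ms - object_speed_ms  # relative speed
    return time_to_collision(distance_m, closing_speed) < ttc_threshold_s


# Stationary object 20 m ahead while travelling at 20 m/s (72 km/h):
should_brake(20.0, 20.0, 0.0)   # True, impact is 1.0 s away
# The same object 60 m ahead leaves 3.0 s, so no intervention yet:
should_brake(60.0, 20.0, 0.0)   # False
```

Real systems weigh many more factors, but the example shows why distance alone is not enough: the same 20 m gap is harmless when the vehicle ahead is pulling away.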

However, the biggest drawback earlier iterations of autonomous emergency braking systems had was that they could not differentiate between different classes of obstacles, on the one hand, and that their effectiveness was greatly reduced by poor visibility caused by heavy rain or snow, on the other.

Fourth-generation radar systems are not affected by these issues, but more importantly, their wide-angle vision now allows the system to monitor traffic and other obstacles such as motorcycles and trucks emerging from side streets, such as happened during our example scenario at the beginning of this article. In this example, the EyeQ system not only detected the truck approaching the intersection, but it also detected that the truck was not slowing down, which resulted in the larger collision avoidance system disabling the throttle, and triggering several alarms to prevent the driver from moving into the approaching truck's path.
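To make the truck scenario a little more concrete, the sketch below shows one way such a decision could be reasoned about. It is a simplified illustration under stated assumptions, not the way EyeQ or any production system works: the function names, the 4-second time horizon, and the constant-speed arrival estimate are all hypothetical.

```python
def stopping_distance(speed_ms: float, decel_ms2: float) -> float:
    """Distance needed to stop at the observed deceleration (v^2 / 2a)."""
    if speed_ms <= 0:
        return 0.0
    if decel_ms2 <= 0:
        return float("inf")  # not slowing down at all
    return speed_ms ** 2 / (2 * decel_ms2)


def inhibit_throttle(distance_to_junction_m: float, speed_ms: float,
                     decel_ms2: float, horizon_s: float = 4.0) -> bool:
    """True if crossing traffic looks set to enter the junction soon.

    horizon_s is an assumed look-ahead time, chosen for illustration only.
    """
    if speed_ms <= 0:
        return False
    cannot_stop = stopping_distance(speed_ms, decel_ms2) > distance_to_junction_m
    arrival_s = distance_to_junction_m / speed_ms  # constant-speed estimate
    return cannot_stop and arrival_s <= horizon_s


# Truck 30 m from the junction at 15 m/s (54 km/h) and not braking:
inhibit_throttle(30.0, 15.0, 0.0)   # True
# Same truck braking at 5 m/s^2 needs 22.5 m and can stop in time:
inhibit_throttle(30.0, 15.0, 5.0)   # False
```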

One car manufacturer is taking the vastly improved capabilities of fourth-generation collision avoidance systems to new levels to minimise the typical injuries pedestrians (and front-seat vehicle occupants) suffer when they are hit by cars. Consider the image below-

Image source: Volvo

For this application, Volvo engineers have developed a set of algorithms that can detect when the vehicle hits a pedestrian, as opposed to the vehicle hitting any other solid object. The details of these algorithms are proprietary to Volvo, but suffice to say that they are reported to have delivered consistently accurate and reliable results during an extensive test regime.

When the vehicle hits a pedestrian, the pedestrian protection system fires pyrotechnic devices to sever the bonnet's attachment points at the windscreen side, while the normal bonnet latch at the front anchors the bonnet to the vehicle to prevent it from flying off when its rear attachment points are severed. Next, the system activates an airbag that both covers most of the windscreen and lifts the back end of the bonnet about 110 mm above its normal position.

The purpose of lifting the bonnet is to provide room between it and the engine so that the bonnet can deform under the impact with the pedestrian, acting as a kind of shock absorber, as it were. The purpose of the airbag is both to soften the hapless pedestrian's contact with the windscreen and to prevent the pedestrian from crashing through the windscreen into the passenger compartment.

However, Volvo’s engineers recognised the possibility that when a vehicle hits a pedestrian at speeds above about 50 km/h, the sloping bonnet might deflect the victim over the vehicle’s roof to land right in front of following vehicles. To prevent this, the bonnet is designed to collapse deep into the engine compartment to absorb as much of the impact forces as possible.

In the real world, many accidents involving pedestrians are unavoidable, and because of this, there is no doubt that all major car manufacturers will design and implement similar pedestrian protection systems in the next few years. However, all of us have lived through moments where we narrowly missed hitting pedestrians, sometimes through no fault of ours, but if the truth were told, some of these near-misses happened when we were not as alert as we might have been, which brings us to-

Image source: Pixabay

There are many reasons why drivers become inattentive, and several studies have found that fatigue is the most common, especially in markets where drivers have long daily commutes. Studies have also shown that in markets with large vehicle populations, about 22% of fatal accidents, 14% of accidents with serious injuries sustained by all vehicle occupants, and 7% of all accidents involve driver fatigue as the primary cause.

Moreover, research in America and the European Union has identified male drivers under the age of 30 as the most likely to be involved in an accident involving driver fatigue, with drivers in the 21 to 25-year-old age bracket following in a close second place.

In the Australian context, however, driver fatigue is the third most common cause of all car accidents, following behind excessive speed and alcohol consumption. Nonetheless, driver fatigue (as the primary cause) has accounted for an average of 10% of all types of car crashes in Australia between 2016 and 2020, with most of these accidents occurring between 10 PM and dawn.

Statistics aside though, fatigue has the same effect(s) on the human body as alcohol intoxication, with reduced reaction times, decreased ability to concentrate, poor decision making, and an overestimation of one's driving skills being the most common manifestations of driver fatigue. Thus, let us look at some of the most recent developments in AI-derived driver fatigue warning systems that are now being implemented by some major car manufacturers, starting with-

Steering pattern monitoring

Steering pattern monitoring uses AI to recognise when a driver’s steering inputs deviate from a pattern of inputs the system has come to accept as “normal”. In practice, the system logs all steering inputs via the steering angle sensor and relates them to the vehicle’s road speed, engine speed, throttle position, yaw sensors, accelerometers, and other sensors such as lane departure warning cameras to construct a kind of database of “normal” and acceptable steering inputs.

While this kind of system does use information from the lane departure warning system, this information is not the primary source of information. As a practical matter, the system monitors both the number of steering inputs over a given time and the severity of steering inputs over the same period to calculate the degree of deviation from what the system considers a normal pattern of steering inputs.

Thus, when the system deems a detected deviation from the normal steering pattern to exceed a maximum allowable threshold, it will trigger audible or visual alarms (or both) to inform the driver that he is a danger to both himself and other road users.
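As a rough illustration of the principle, the sketch below compares the number and severity of steering inputs in the current period against a learned baseline. It is not how any production system is implemented: a simple statistical z-score stands in for the AI model, and the function names and the threshold of 3.0 are assumptions made for the example.

```python
from statistics import mean, stdev


def deviation_score(baseline: list[float], current: float) -> float:
    """How many standard deviations `current` lies from the baseline mean."""
    sigma = stdev(baseline)
    if sigma == 0:
        return 0.0 if current == mean(baseline) else float("inf")
    return abs(current - mean(baseline)) / sigma


def steering_alarm(baseline_counts: list[float], baseline_severity: list[float],
                   count: float, severity: float, threshold: float = 3.0) -> bool:
    """Alarm when either the number or the severity of inputs is abnormal.

    threshold is an illustrative value, not a real calibration figure.
    """
    worst = max(deviation_score(baseline_counts, count),
                deviation_score(baseline_severity, severity))
    return worst > threshold


# Baseline: steering inputs per minute and average input size in degrees
normal_counts = [10, 12, 11, 9, 13]
normal_severity = [2.0, 2.5, 1.5, 2.0, 2.0]

steering_alarm(normal_counts, normal_severity, 12, 2.2)   # False, within pattern
steering_alarm(normal_counts, normal_severity, 25, 6.0)   # True, erratic inputs
```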

Lane monitoring

These systems typically use existing equipment that monitors a vehicle’s position in a driving lane, but with AI-based software that counts the number of lane departure warnings the system logs in a fixed period. Based on this information, the system can calculate the probability that the driver is fatigued and is driving erratically because of lapses in concentration and alertness.

If the system deems the driver fatigued, it will trigger audible and/or visual alarms to inform the driver that he needs to take a break from driving.
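The counting part of this approach can be sketched as a sliding time window. The example below is a simplified illustration only: it uses a plain threshold instead of a probability calculation, and the class name, the five-minute window, and the limit of three warnings are assumptions, not values from any real system.

```python
from collections import deque


class LaneDepartureCounter:
    """Counts lane departure warnings inside a sliding time window."""

    def __init__(self, window_s: float = 300.0, max_warnings: int = 3):
        self.window_s = window_s          # assumed window length
        self.max_warnings = max_warnings  # assumed tolerated warnings
        self._events: deque[float] = deque()

    def record(self, timestamp_s: float) -> bool:
        """Log one warning; return True if the driver is deemed fatigued."""
        self._events.append(timestamp_s)
        # Discard warnings that have fallen out of the window
        while timestamp_s - self._events[0] > self.window_s:
            self._events.popleft()
        return len(self._events) > self.max_warnings


counter = LaneDepartureCounter()
results = [counter.record(t) for t in (0, 100, 200, 250)]
# results is [False, False, False, True]: the fourth warning within
# five minutes exceeds the limit and would trigger the alarm.
```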

Face and/or eye movement monitoring

This kind of system is perhaps the most interesting from a purely technical perspective because it uses a built-in database of millions of images of people in various stages of tiredness, fatigue, or exhaustion. The technical details of these systems are too complex to explain here, but in essence, the system uses a camera to monitor not only the driver's face, but also his eye movements, head rotation, frequency of yawning, eye-blinking frequency, and the duration of blinks, as well as eye direction.

Based on this data, the system can reliably detect fatigue in any driver, and it will issue appropriate warnings and alarms when required, especially in cases where it detects a condition known as “hypovigilance”. This condition involves a state of reduced alertness and diminished responsiveness to sensory inputs, which is usually a precursor condition to acute exhaustion. Typical indicators of hypovigilance include increased yawning frequency, rapid eye movements, reduced blinking rates, and a lack of head rotations.
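Some of the measurements mentioned above, such as blinking frequency and blink duration, are easy to picture once the camera software has decided, frame by frame, whether the driver's eyes are open or closed. The sketch below assumes that per-frame decision already exists; the function name and the returned field names are hypothetical, and real systems use far richer image analysis.

```python
def blink_metrics(eye_closed: list[bool], fps: float) -> dict[str, float]:
    """Summarise blinking from per-frame eye states (True means closed)."""
    blink_durations = []
    run = 0  # length of the current run of closed-eye frames
    for closed in eye_closed:
        if closed:
            run += 1
        elif run:
            blink_durations.append(run / fps)
            run = 0
    if run:  # eyes still closed when the clip ends
        blink_durations.append(run / fps)

    clip_s = len(eye_closed) / fps
    return {
        "blinks_per_min": 60 * len(blink_durations) / clip_s if clip_s else 0.0,
        "mean_blink_s": (sum(blink_durations) / len(blink_durations)
                         if blink_durations else 0.0),
        "closed_fraction": sum(eye_closed) / len(eye_closed) if eye_closed else 0.0,
    }


# Three seconds of video at 10 frames per second with two blinks
frames = [False] * 10 + [True] * 2 + [False] * 10 + [True] * 4 + [False] * 4
metrics = blink_metrics(frames, fps=10)
```

A monitoring system could then compare values like these against the driver's own earlier readings, since long blinks and a rising share of closed-eye time are the kind of change the article describes.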

Physiological measurements

One other method to detect fatigue and reduced alertness in drivers that is now undergoing testing and evaluation involves physiological changes in the body, such as changes in the skin’s electrical conductivity, changing or fluctuating heartbeats, and muscle contractions.

While these methods are vastly more accurate than face or eye monitoring, the devices required to take the measurements, such as heart rate monitors and electrodes to measure brain activity, are currently intrusive and impractical. Nonetheless, several car manufacturers are currently working with medical researchers and design institutes to develop sensors that can be placed in steering wheels and seats to monitor physiological parameters unobtrusively.

While some manufacturers are now implementing one of the fatigue detection systems described above as a stand-alone system, many manufacturers are choosing to integrate one of the above systems into one or more other advanced ADAS systems, to reduce so-called "false positives" from any single system.
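One simple way to picture how integration reduces false positives is a voting scheme, in which the driver is only flagged when several independent systems agree. The sketch below is an assumption made for illustration: the function name and the two-out-of-three rule are hypothetical, and manufacturers may weigh the individual systems quite differently.

```python
def fused_fatigue(steering_flag: bool, lane_flag: bool, eye_flag: bool,
                  min_votes: int = 2) -> bool:
    """Flag fatigue only when at least `min_votes` systems agree."""
    votes = sum((steering_flag, lane_flag, eye_flag))
    return votes >= min_votes


# A single gust of wind upsets the steering pattern, but nothing else:
fused_fatigue(True, False, False)   # False, treated as a false positive
# Erratic steering plus lane wandering together are taken seriously:
fused_fatigue(True, True, False)    # True
```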

For instance, combining an excessive number of large, erratic steering inputs with both repeated wandering into adjacent lanes, and reduced eye-blinking rates is more likely to yield an accurate and reliable diagnosis of driver fatigue than the results of any single system in isolation would, which begs this question-

Image source: Autel

While dealerships will have no choice other than to invest in the tools and equipment the calibration of these new systems demands, things might be a little more problematic for us in the independent industry- even after the Mandatory Data Sharing Law comes into operation.

Getting the technical information from dealerships and manufacturers is one thing, but buying the tools and equipment for each brand and model is certain to be prohibitively expensive for many independent workshops, even if they have the space available for ADAS calibration procedures.

However, Autel, a leading manufacturer of diagnostic and test equipment, has released a tool that contains a comprehensive database of technical information, calibration requirements/procedures, and step-by-step instructions on how to perform both ADAS diagnostic and calibration procedures on all major vehicle brands and most models, including information on the new, next-level ADAS systems. Standalone software packages are also available to upgrade some other Autel tools to achieve the same functionality; visit this resource for more information.

There is no doubt that the proliferation of advanced ADAS systems will have a profound impact on the independent repair industry in Australia not only in terms of the steep learning curves involved but also in terms of the large investments in tools and equipment many independent workshops will have to make to remain competitive and profitable over the long term.

There will also be an impact on our customers in terms of increased maintenance costs, and it will be up to us, as independent operators, to educate our customers on why relatively simple procedures like tyre changes, wheel alignments, and even basic suspension repairs now come with “diagnostic and calibration” charges.

The truth of the matter is that the more complex ADAS systems become, the more important it becomes to keep these systems properly calibrated to ensure their correct operation at all times, so are you up to the challenge of mastering the next generation of ADAS systems?